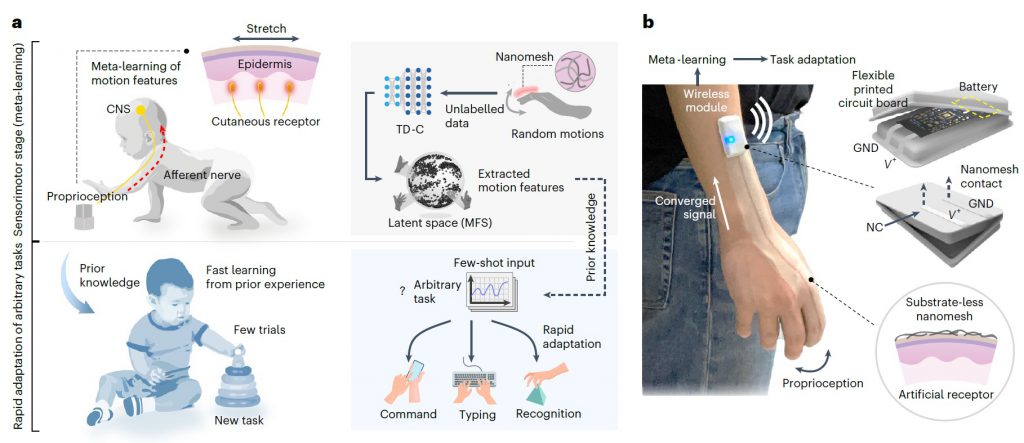

We report a substrate-less nanomesh receptor that is coupled with an unsupervised meta-learning framework and can provide user-independent, data-efficient recognition of different hand tasks. The nanomesh, which is made from biocompatible materials and can be directly printed on a person’s hand, mimics human cutaneous receptors by translating electrical resistance changes from fine skin stretches into proprioception. A single nanomesh can simultaneously measure finger movements from multiple joints, providing a simple user implementation and low computational cost. We also develop a time-dependent contrastive learning algorithm that can differentiate between different unlabelled motion signals. This meta-learned information is then used to rapidly adapt to various users and tasks, including command recognition, keyboard typing and object recognition.
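As a rough illustration of what a time-dependent contrastive objective can look like, the sketch below treats signal windows recorded close together in time as positive pairs and all other windows as negatives, using a generic InfoNCE-style loss. It is not the implementation from the paper: the function name, the `max_gap` and `temperature` parameters, and the use of plain NumPy embeddings are assumptions made for this example.

```python
import numpy as np

def time_contrastive_loss(embeddings, timestamps, max_gap=1.0, temperature=0.1):
    """InfoNCE-style loss where windows within `max_gap` seconds are positives.

    Hypothetical helper for illustration; assumes at least two windows and
    at least one anchor with a temporal neighbour.
    """
    embeddings = np.asarray(embeddings, dtype=float)
    timestamps = np.asarray(timestamps, dtype=float)

    # Cosine similarity between all pairs of window embeddings.
    z = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    sim = z @ z.T / temperature

    # Exclude each window's similarity with itself.
    eye = np.eye(len(z), dtype=bool)
    sim = np.where(eye, -np.inf, sim)

    # Row-wise log-softmax (numerically stabilised).
    row_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - row_max - np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))

    # Positives: other windows whose timestamps lie within max_gap.
    gaps = np.abs(timestamps[:, None] - timestamps[None, :])
    positives = (gaps <= max_gap) & ~eye
    has_pos = positives.any(axis=1)

    # Average negative log-probability of the positives for each anchor.
    pos_log_prob = np.where(positives, log_prob, 0.0).sum(axis=1)
    per_anchor = -pos_log_prob[has_pos] / positives[has_pos].sum(axis=1)
    return per_anchor.mean()

# Toy data: windows 0 and 1 are adjacent in time, as are windows 2 and 3.
timestamps = np.array([0.0, 0.5, 10.0, 10.5])
aligned = np.array([[1.0, 0.0], [1.0, 0.01], [0.0, 1.0], [0.01, 1.0]])
misaligned = aligned[[0, 2, 1, 3]]  # temporal neighbours now look dissimilar

loss_aligned = time_contrastive_loss(aligned, timestamps)
loss_misaligned = time_contrastive_loss(misaligned, timestamps)
```

In this toy setup the loss is lower when temporally adjacent windows have similar embeddings, which is the property such an objective rewards when no motion labels are available.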

IEEE Spectrum: Spray-on smart skin reads typing and hand gestures

Related publications

1. K. Kim et al., A substrate-less nanomesh receptor with meta-learning for rapid hand task recognition, Nature Electronics, 39, Dec 2022 [LINK] [EXTENDED DATA] [SUPPLEMENT] [CODE]