We propose a wearable hybrid interface in which eye movements and mental concentration directly control a quadcopter in three-dimensional space. This noninvasive, low-cost interface addresses limitations of previous work by enabling users to complete complex tasks in a constrained environment where only visual feedback is available. Combining the two inputs increases the number of available control commands, allowing the flying robot to travel in eight different directions within the physical environment. Five human subjects participated in experiments to test the feasibility of the hybrid interface. A front-view camera on the hull of the quadcopter provided the only visual feedback, which was shown to each remote subject on a laptop display. Guided by this feedback, the subjects used the interface to navigate through preset target locations in the air. Flight performance was evaluated against a keyboard-based interface. We demonstrate that the hybrid interface can be used to explore and interact with a three-dimensional physical space through a flying robot.
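The way two input channels multiply into a larger command set can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' implementation. It assumes the eye tracker yields one of four gaze directions and that a two-level concentration state comes from thresholding a normalized EEG attention score in [0, 1]. The command names, the 0.5 threshold, and the assignment of input combinations to commands are all hypothetical.

```python
from enum import Enum


class Gaze(Enum):
    """Hypothetical gaze classes assumed to come from the eye tracker."""
    LEFT = "left"
    RIGHT = "right"
    UP = "up"
    DOWN = "down"


class Mental(Enum):
    """Hypothetical two-level mental state assumed to come from EEG classification."""
    CONCENTRATED = "concentrated"
    RELAXED = "relaxed"


# Hypothetical assignment of the 4 x 2 input combinations to 8 flight commands.
COMMAND_TABLE = {
    (Gaze.UP, Mental.CONCENTRATED): "move_forward",
    (Gaze.DOWN, Mental.CONCENTRATED): "move_backward",
    (Gaze.LEFT, Mental.CONCENTRATED): "move_left",
    (Gaze.RIGHT, Mental.CONCENTRATED): "move_right",
    (Gaze.UP, Mental.RELAXED): "ascend",
    (Gaze.DOWN, Mental.RELAXED): "descend",
    (Gaze.LEFT, Mental.RELAXED): "turn_left",
    (Gaze.RIGHT, Mental.RELAXED): "turn_right",
}


def classify_mental_state(attention: float, threshold: float = 0.5) -> Mental:
    """Map an assumed normalized attention score to a binary mental state."""
    return Mental.CONCENTRATED if attention >= threshold else Mental.RELAXED


def hybrid_command(gaze: Gaze, attention: float, threshold: float = 0.5) -> str:
    """Combine a gaze class and an attention score into one flight command."""
    return COMMAND_TABLE[(gaze, classify_mental_state(attention, threshold))]


if __name__ == "__main__":
    print(hybrid_command(Gaze.UP, 0.9))  # concentrated + up
    print(hybrid_command(Gaze.UP, 0.1))  # relaxed + up
```

The point of the combination is that neither channel alone suffices: four gaze classes or two mental states give at most four or two commands, while their product gives 4 × 2 = 8 distinct commands.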

[KAIST Leads AI] Professor Sungho Jo, School of Computing, Researches Technology for Controlling Drones with the Brain [LINK]

‘Brainwave Kickoff’ at the World Cup Opening Match [LINK]

Related publications

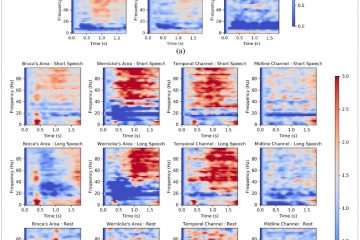

1. B Kim, M Kim, S Jo, Quadcopter flight control using a low-cost hybrid interface with EEG-based classification and eye tracking, Computers in Biology and Medicine, 51:82-92, 2014. Selected as an Honorable Mention Paper, Computers in Biology and Medicine, 2014. [LINK] [PDF]